From Babbage's Analytical Engine to Modern AI

Exploring the Revolutionary World of Computer Technology

- The Evolution of Computers: A Comprehensive Journey from Charles Babbage to AI and Beyond

- Introduction

- Charles Babbage: The Father of the Computer

- The First Electronic Computers and Their Role in Code-Breaking

- Transistors, Integrated Circuits, and Microprocessors: A Trilogy of Innovations in Computing

- The Birth of Personal Computers

- The Rise of the Internet and the World Wide Web

- The Foundations of the Internet: Technologies and Collaborative Movements

- Artificial Intelligence and Machine Learning

- The Future of Computing

- Conclusion

- FAQ

- Pros and Cons

- Resources

The Evolution of Computers: A Comprehensive Journey from Charles Babbage to AI and Beyond

Introduction

The history of computers is a fascinating tale of innovation, ingenuity, and vision. Over the years, computing technology has evolved dramatically, transforming our lives and shaping the world we live in today. In this extensive blog post, we will delve deeper into the most significant moments in the evolution of computers, from Charles Babbage's early concepts to the rise of artificial intelligence, and explore the groundbreaking developments on the horizon. By understanding this history, we can better appreciate the technological advancements we enjoy today and anticipate the innovations that will define the future.

Charles Babbage: The Father of the Computer

Charles Babbage (1791-1871) was a visionary English mathematician, inventor, and mechanical engineer who is often called the "father of the computer" because his designs laid the conceptual foundation for modern computing. Born in London, he studied mathematics at Cambridge (first at Trinity College, later at Peterhouse) and was elected a Fellow of the Royal Society in 1816.

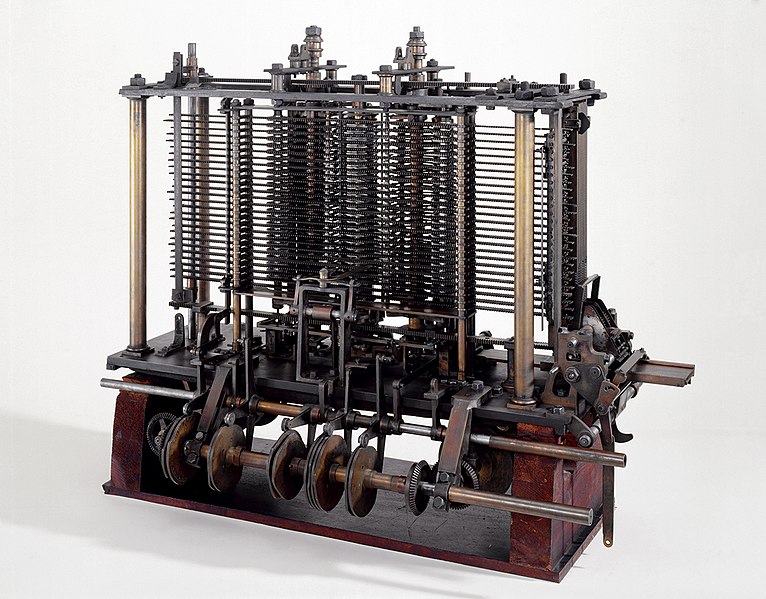

Babbage's most ambitious design, the Analytical Engine, was first described in 1837. It was intended to be a general-purpose computing device that read its instructions and data from punched cards, an idea borrowed from the Jacquard loom. The design anticipated several concepts central to later computers, including programmability, conditional branching, and the separation of memory (the "store") from the arithmetic unit (the "mill"), although the machine itself was never built.

Before the Analytical Engine, Babbage designed the Difference Engine, a mechanical calculator intended to compute mathematical tables by the method of finite differences, which reduces the work of tabulating a polynomial to repeated addition. Although Babbage received funding from the British government to build it, the project was never completed, owing to cost overruns and a dispute with his chief engineer, Joseph Clement. Nevertheless, his work on both engines left an indelible mark on the history of computing, demonstrating the potential of mechanical computation and inspiring future generations of computer scientists.
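
The Difference Engine was designed to tabulate polynomials using finite differences: once a polynomial's first few differences are known, every further table entry follows from additions alone, with no multiplication. The short Python sketch below illustrates that arithmetic, not Babbage's actual mechanism; the function names `initial_differences` and `tabulate` and the sample polynomial are our own illustrative choices.

```python
def initial_differences(poly, start, degree):
    """Return [p(start), first difference, second difference, ...].

    `poly` is any callable (hypothetical helper for illustration). For a
    polynomial of the given degree, the last difference is constant.
    """
    values = [poly(start + i) for i in range(degree + 1)]
    diffs = []
    while values:
        diffs.append(values[0])
        # Replace the row with the differences between neighbouring entries.
        values = [b - a for a, b in zip(values, values[1:])]
    return diffs


def tabulate(diffs, count):
    """Produce `count` successive table values using only addition."""
    cols = list(diffs)  # cols[0] holds the current table value
    table = []
    for _ in range(count):
        table.append(cols[0])
        # Each column adds in its right-hand neighbour. Going left to right
        # means each addition uses the neighbour's value from the previous step.
        for i in range(len(cols) - 1):
            cols[i] += cols[i + 1]
    return table


def sample(x):
    # Illustrative polynomial: x^2 + x + 41
    return x * x + x + 41


print(tabulate(initial_differences(sample, 0, 2), 6))  # [41, 43, 47, 53, 61, 71]
```

Because the n-th difference of a degree-n polynomial is constant, n + 1 registers that repeatedly add into one another are enough to generate the whole table.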

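Ada Lovelace, a gifted mathematician and the daughter of the poet Lord Byron, worked with Babbage to describe what the Analytical Engine could do. In 1843 she published an English translation of Luigi Menabrea's paper on the machine, adding extensive notes of her own. One of these notes lays out a step-by-step procedure for computing Bernoulli numbers on the Engine, which is why she is often recognized as the world's first computer programmer. Her notes also argued that the machine could manipulate symbols other than numbers, for example to compose music, extending its potential well beyond pure calculation.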

Charles Babbage, CC BY-SA 2.0, via Wikimedia Commons

The First Electronic Computers and Their Role in Code-Breaking

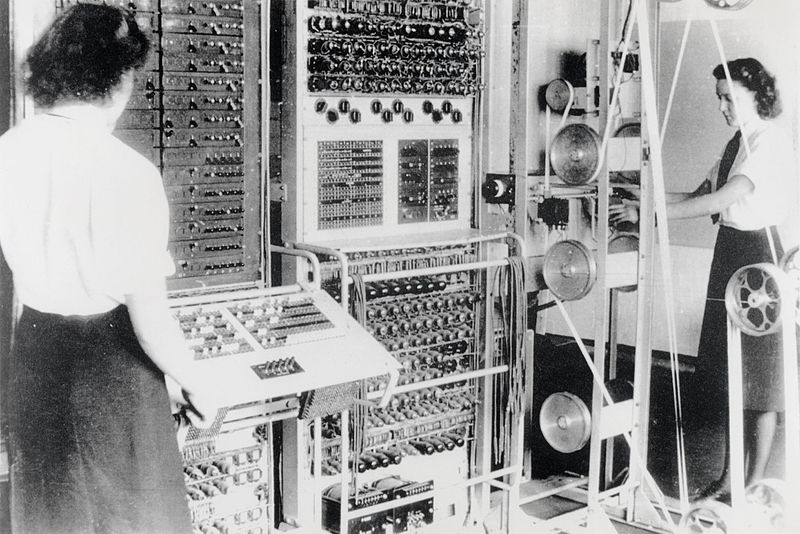

During World War II, electronic computers played a crucial role in code-breaking efforts that significantly affected the course of the war. Machines such as Colossus in Britain and, in the United States, ENIAC (completed in 1945) showcased the power of electronic computing and the potential of vacuum tubes as fast switching elements.

Colossus and Bletchley Park

The Colossus, designed by British engineer Tommy Flowers, was the world's first programmable electronic digital computer. It was instrumental in breaking encrypted messages from the German military, particularly those protected by the Lorenz cipher, which the German High Command used for its most important communications. Colossus was built at the Post Office Research Station at Dollis Hill and deployed at Bletchley Park, a top-secret code-breaking facility in the UK, where a team of talented mathematicians and engineers, including Alan Turing, worked tirelessly to decrypt Axis communications. Turing's work on the Bombe, an electromechanical machine that built on earlier Polish work and helped break the Enigma cipher, is another noteworthy example of the role of machines in wartime code-breaking.

ENIAC and its Applications

The Electronic Numerical Integrator and Computer (ENIAC), built by John W. Mauchly and J. Presper Eckert at the University of Pennsylvania, is generally regarded as the first general-purpose electronic computer: it could be reconfigured to solve a wide range of numerical problems. Although it was initially developed to calculate artillery firing tables, its applications expanded to include cryptography, weather prediction, and atomic energy calculations. The success of these machines highlighted the importance of computers in modern society and paved the way for further advancements in computer technology.

See page for author, Public domain, via Wikimedia Commons

Transistors, Integrated Circuits, and Microprocessors: A Trilogy of Innovations in Computing

1. The Advent of Transistors

The trajectory of computer technology took a radical turn in 1947, when John Bardeen and Walter Brattain, working at Bell Labs in a group led by William Shockley, built the first transistor; Shockley developed the junction transistor soon afterwards, and the three shared the 1956 Nobel Prize in Physics. Transistors replaced vacuum tubes as switching elements, and their compact size, superior energy efficiency, and greater reliability spurred the creation of faster and more powerful computing machines.

2. The Leap to Integrated Circuits

Following on the heels of transistors, the late 1950s saw the invention of the integrated circuit, developed independently by Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor. By fabricating transistors and other components together on a single piece of semiconductor, integrated circuits accelerated the miniaturization trend in computing. The first chips held only a handful of components, but by the early 1970s a single silicon chip could hold thousands of transistors, substantially boosting processing power and laying the foundation for the computers we use today.

3. Microprocessors: The Game-Changer

The early 1970s marked another pivotal moment with the emergence of the microprocessor, which placed an entire Central Processing Unit (CPU) on a single chip; the Intel 4004, released in 1971, was the first commercially available example. This milestone in miniaturization and integration dramatically lowered the cost of processing power and paved the way for the personal computer.

From left: John Bardeen, William Shockley and Walter Brattain, the inventors of the transistor, 1948. Although Shockley was not involved in the invention, Bell Labs decided that he must appear on all publicity photos along with Bardeen and Brattain. AT&T; photographer: Jack St. (last part of name not stamped well enough to read), New York, New York., Public domain, via Wikimedia Commons

The Birth of Personal Computers

Early personal computers such as the Altair 8800, Apple I, and IBM PC emerged in the 1970s and early 1980s. These machines brought computing into homes and businesses, making technology accessible to the general public.

Altair 8800

Released in 1975, the Altair 8800 is often considered the first personal computer. It inspired a generation of computer enthusiasts and led to the founding of Microsoft: Bill Gates and Paul Allen wrote Altair BASIC, an interpreter for the BASIC programming language, and founded the company to sell it.

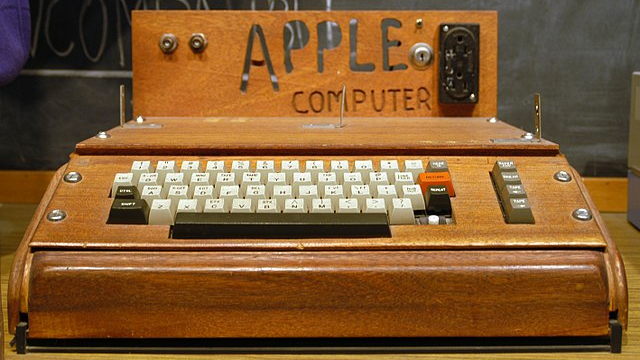

Apple I

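In 1976, Steve Wozniak and Steve Jobs introduced the Apple I, which was sold as a fully assembled circuit board. Buyers still had to add a case, power supply, keyboard, and display, but it spared them much of the soldering that kit computers such as the Altair required.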

IBM PC

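The introduction of the IBM PC in 1981 marked a turning point in the personal computer industry. Its open architecture, built largely from off-the-shelf components, was widely copied by other manufacturers, and the resulting "IBM-compatible" PCs became a de facto standard that allowed hardware and software from many vendors to work together.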

Michael Holley (Swtpc6800), Public domain, via Wikimedia Commons

Ed Uthman, CC BY-SA 2.0, via Wikimedia Commons

The Rise of the Internet and the World Wide Web

The development of the internet and the World Wide Web in the late 20th century had a profound impact on the way computers were used and how information was shared. The internet, a global network of interconnected computers, enabled near-instantaneous communication and the sharing of data across vast distances. The World Wide Web, proposed by Tim Berners-Lee at CERN in 1989, provided a user-friendly system of linked pages for accessing and navigating the vast resources available on the internet.

Web Browsers

The creation of web browsers, such as Mosaic and Netscape Navigator, made it even easier for everyday users to access information on the World Wide Web, fueling the rapid growth of the internet and its increasing influence on society.

Everyday Internet Technologies

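The internet has popularized numerous communication tools that have become an integral part of our daily lives, including email, instant messaging, and video calls. Some of these are older than the public internet: networked email dates back to the ARPANET in the early 1970s, and bulletin board systems (BBS) were usually reached by dialing in over telephone lines. Together, these technologies have transformed the way we interact, collaborate, and share information with one another.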

The Foundations of the Internet: Technologies and Collaborative Movements

The Building Blocks: Technologies and Protocols

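The internet relies on many underlying technologies and protocols, and the most fundamental of these is the TCP/IP suite. The Internet Protocol (IP) gives every device an address and carries data across networks in small packets, while the Transmission Control Protocol (TCP) runs on top of IP and turns those packets into a reliable, ordered stream of bytes by numbering, acknowledging, and retransmitting them as needed. Together they act as the common language that lets very different networks interoperate.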
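
To make "reliable, ordered stream of bytes" concrete, here is a minimal Python sketch using the standard `socket` and `threading` modules: a server on the loopback address echoes back whatever a client sends over a TCP connection. The function name `echo_once` is hypothetical, and the port is picked by the operating system. Note that the packet numbering, acknowledgement, and retransmission described above all happen inside the operating system's network stack; the program only sees the resulting byte stream.

```python
import socket
import threading


def echo_once(message: bytes) -> bytes:
    """Send `message` over a loopback TCP connection and return the echo.

    Hypothetical helper for illustration only.
    """
    # SOCK_STREAM over AF_INET selects TCP running on top of IPv4.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        host, port = server.getsockname()

        def serve() -> None:
            conn, _addr = server.accept()
            with conn:
                # Read until the client signals end-of-stream, then echo it all.
                chunks = []
                while True:
                    data = conn.recv(4096)
                    if not data:
                        break
                    chunks.append(data)
                conn.sendall(b"".join(chunks))

        worker = threading.Thread(target=serve)
        worker.start()

        with socket.create_connection((host, port)) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)  # tell the server we are done sending
            reply = []
            while True:
                data = client.recv(4096)
                if not data:  # server closed its side of the connection
                    break
                reply.append(data)

        worker.join()
    return b"".join(reply)


print(echo_once(b"hello, internet"))
```

However the data is split into packets on the way, the client receives exactly the bytes it sent, in order, which is the guarantee TCP adds on top of IP.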

Complementing these protocols are hardware components such as routers and switches. Switches forward data between devices within a local network, while routers forward packets between networks, choosing a path toward each packet's destination address. Together they ensure that information is delivered accurately and efficiently across the network.

The Power of Collaboration: The Open Source Movement and GPL License

The internet's rise has been a major driving force behind the expansion and significance of the open-source movement. This movement champions a cooperative approach to software development, advocating for the sharing of source code and knowledge among developers.

At the heart of this collaborative ethos is the GNU General Public License (GPL), written by Richard Stallman for the Free Software Foundation (FSF) and first released in 1989. The GPL is a widely adopted "copyleft" license: it grants users the right to run, study, modify, and distribute software, and it requires that modified versions distributed to others carry the same freedoms.

Bolstered by the GPL and the FSF, the open-source movement has been instrumental in shaping the internet's development. It has stimulated the creation of key technologies and platforms, such as the Linux kernel (released under the GPL), the Apache web server (released under the permissive Apache License), and countless programming languages and libraries. By fostering collaboration and resource sharing, the open-source movement has fueled innovation and shaped the internet as we know and use it today.

Artificial Intelligence and Machine Learning

Artificial intelligence (AI) has been a core part of computer science since the 1950s (the term itself was coined in the proposal for the 1956 Dartmouth workshop), with the goal of creating machines capable of thinking and learning like humans. Advances in AI have led to machine learning algorithms, which, rather than following hand-written rules, learn patterns from example data and improve their performance as they see more of it. Today, AI and machine learning technologies are used in a wide range of applications, from natural language processing and image recognition to autonomous vehicles and medical diagnosis.
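
As a toy illustration of "learning from data", the Python sketch below fits a straight line y ≈ w·x + b to a handful of example points using gradient descent on the mean squared error. The data points, learning rate, and number of passes are made-up values chosen for the example, and `fit_line` is a hypothetical name. Each pass nudges `w` and `b` in the direction that reduces the error, which is the sense in which the model "improves with experience". Real systems use far larger models and datasets, but follow the same basic idea.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error.

    Hypothetical helper for illustration; returns (w, b, error history).
    """
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        history.append(sum(e * e for e in errors) / n)  # mean squared error
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


# Made-up example data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b, history = fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
print(f"error: {history[0]:.3f} -> {history[-1]:.6f}")
```

The program is never told the rule y = 2x + 1; it recovers `w` close to 2 and `b` close to 1 purely from the examples, and the recorded error shrinks as training proceeds.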

The Future of Computing

As we look to the future, several emerging trends and technologies promise to reshape the landscape of computing even further:

Quantum Computing

Quantum computing represents a radical departure from classical computing: it processes information using qubits and the principles of quantum mechanics, such as superposition and entanglement. For certain problems this approach could be dramatically faster than any known classical method; examples include factoring the large numbers that underpin widely used public-key cryptography (via Shor's algorithm, which would require a large, error-corrected quantum computer) and simulating complex molecules for drug discovery.

Edge Computing

Edge computing is an emerging trend that brings processing power closer to the source of data, often on the periphery of the network. This shift has the potential to improve efficiency and reduce latency, enabling real-time processing and analysis for applications like the Internet of Things (IoT), augmented reality, and autonomous vehicles.

Brain-Computer Interfaces

Brain-computer interfaces (BCIs) enable direct communication between the human brain and a computer, allowing devices to be controlled by thought and unlocking new possibilities for human-computer interaction. These technologies could revolutionize the way we interact with computers and have significant implications for accessibility, rehabilitation, and beyond.

Conclusion

The evolution of computers has been marked by remarkable breakthroughs and transformative technologies that shape the world we live in today. Looking ahead, the possibilities are both thrilling and challenging: from quantum computing to brain-computer interfaces, the next frontier of computing could revolutionize our lives and redefine what's possible. Understanding this history, from the code-breaking machines of World War II to the infrastructure beneath everyday internet technologies and the open-source movement built around licenses like the GPL, helps us appreciate the extraordinary journey that has led us here and anticipate the innovations that lie ahead.

Propose to a man any principle, or an instrument, however admirable, and you will observe the whole effort is directed to find a difficulty, a defect, or an impossibility in it. If you speak to him of a machine for peeling a potato, he will pronounce it impossible: if you peel a potato with it before his eyes, he will declare it useless because it will not slice a pineapple.

(Adapted from Charles Babbage, Passages from the Life of a Philosopher, 1864)

FAQ

- Q: Who is considered the father of the computer?

A: Charles Babbage is often regarded as the father of the computer because of his work on the Analytical Engine.

- Q: What was the first electronic computer used for?

A: Colossus, the first programmable electronic digital computer, was used for code-breaking during World War II.

- Q: How did the invention of transistors impact computer technology?

A: Transistors revolutionized computing because they were smaller, more energy-efficient, and more reliable than vacuum tubes.

- Q: What are some examples of everyday internet technologies?

A: Email, instant messaging, and video calls are common examples; bulletin board systems were an earlier, mostly dial-up forerunner.

- Q: What emerging technologies are shaping the future of computing?

A: Quantum computing, edge computing, and brain-computer interfaces are some of the emerging technologies in the field.

Pros and Cons

Pros:

- Increased Productivity and Efficiency: Technological advancements in computing have streamlined work processes across nearly every sector.

- Revolution in Communication: The internet has transformed how we connect, share information, and collaborate.

- Transformation of Industries: AI and machine learning are redefining sectors from healthcare to finance, creating new possibilities and efficiencies.

Cons:

- Job Displacement and Privacy Concerns: The rapid pace of technological advancement can lead to job displacement and raise serious privacy concerns.

- Digital Divide: The disparity in access to technology can exacerbate existing social inequalities.

- Overreliance on Technology: An overdependence on technology may diminish human interaction and critical thinking skills.

Resources

- The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution by Walter Isaacson

Description: A captivating exploration of the individuals who shaped the digital revolution, from Ada Lovelace to Steve Jobs.

- Turing's Cathedral: The Origins of the Digital Universe by George Dyson

Description: Dive into the story of how John von Neumann's team at the Institute for Advanced Study in Princeton built one of the first stored-program computers, a machine that put Alan Turing's ideas into practice.

- Code: The Hidden Language of Computer Hardware and Software by Charles Petzold

Description: Understand the principles behind computer hardware and software with this comprehensive and accessible guide.

- The Computer: A Very Short Introduction by Darrel Ince

Description: Get an overview of the history and development of computers, from early machines to modern-day supercomputers.

- AI Superpowers: China, Silicon Valley, and the New World Order by Kai-Fu Lee

Description: Explore the global impact of artificial intelligence and the race for AI dominance between China and the US.